Part01

Part03

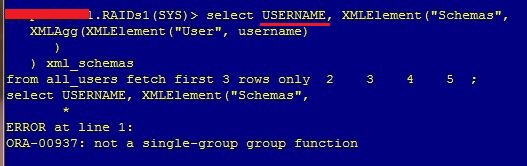

Starting from previous

output, running the following query

set lines 160

col SAMPLE_TIME for a30

set pages 99

select

sample_id

, session_id

, session_serial#

, time_waited

, count(*)

, sample_time

from

my_active_session_history

group by

sample_id

, session_id

, session_serial#

, time_waited

, sample_time

order by

session_id

, sample_id

/

you can aggregate the result. The output is

here.

I get an extract of this output to try to explain what happens.

What

my anonymous PL/SQL (see the

previous post) does, is to polling the V$SESSION_ACTIVE_SESSION every second and save the output in MY_ACTIVE_SESSION_HISTORY table.

Because each time, the script read the same rows plus one (the V$ASH is renewed each second), for the same sessions, you see a lot of rows for each loop

Consider, for example, the session 1732/serial 31065.

select

sample_id

, session_id

, session_serial#

, time_waited

, count(*)

, sample_time

from

my_active_session_history

where session_id=1732 and session_serial#=31065

group by

sample_id

, session_id

, session_serial#

, time_waited

, sample_time

order by

session_id

, sample_id

/

|

| Click for enlarge |

It appears 50 times for the snap_id 50243482, 49 times for the snap_id 50243483 and 48 times for snap_id 50243484 (see note).

What happens to the session 1732, where for the sample_id=50243484 you see two distinct rows?

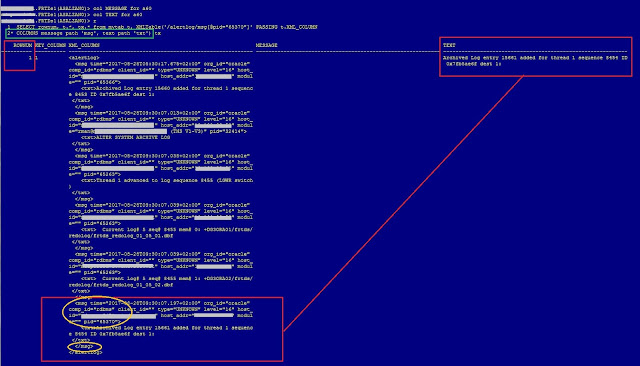

The following statement has

this output (it is just the detail of the session 1732):

select

row_number() over (partition by sample_id order by sample_id, inserting_time) rn

, inserting_time

, sample_id

, wait_time

, time_waited

, session_state

, event

from

my_active_session_history

where

session_id=1732 and session_serial#=31065

order by

sample_id, 1

/

Because I'm interested just in the first rows of each change of "sample_id" and/or change of the "state" inside the same sample_id, I want to see only this changes

with

mash as (

select

inserting_time

, time_waited

, sample_id

, sample_time

, session_id

, wait_time

, event

, session_state

, row_number() over (partition by sample_id, time_waited, session_state, event order by inserting_time) rn

from

my_active_session_history

where

session_id=1732 and session_serial#=31065

)

select

sample_id

, sample_time

, inserting_time

, time_waited

, session_state

, event

from

mash

where

rn =1

order by

sample_id

, inserting_time

/

|

| Click for enlarge |

What happens here?

- At sample_id =50243482 (14:50:40) the sid 1732 is working (it's ON CPU). This means that TIME_WAITED is 0 (zero)

- One second after (14:50:41), sample_id=50243483, the sid 1732 is still working and TIME_WAITED is still 0 (zero)

- One second after (14:50:42), sample_id=50243484, the sid 1732 wait on direct path read event, but the TIME_WAITED column is not updated. So while the SESSION_STATE/EVENT columns report the correct state of the session, the TIME_WAITED, not yet (inserting_time=15-DEC-17 02.50.43.192604 PM). Because of this Oracle update on the same SNAP_ID (50243484) this row with the correct value of TIME_WAITED column (inserting_time=15-DEC-17 02.50.44.372309 PM).

In the next session, I try to explain this "update" in a visual way

Note

Is it correct that each next sample_id, have one less? When my PL/SQL script inserts a row the first time, it does at 15-DEC-17 02.

50.40.833420 PM (sample_id=50243482). The second time, it inserts at 15-DEC-17 02.

50.42.016313 PM (sample_id=50243483): it inserts two seconds after the first time. The third time it inserts at 15-DEC-17 02.

50.43.192604.

Anyway, the last record for all samples is the same: 15-DEC-17 02.

51.40.488761 PM. Because all samples end at the same time (MX column in the next picture), each of them count one record less.

You can run the following select that show you the min and max timestamp

select * from (

select

sample_id

, session_id

, session_serial#

, time_waited

, sample_time

, inserting_time

, max(inserting_time) over (partition by sample_id) mx

, count(*) over (partition by sample_id) cnt

, row_number() over (partition by sample_id order by inserting_time) rn_min

, row_number() over (partition by sample_id order by inserting_time desc) rn_max

from

my_active_session_history

where

session_id=1732 and session_serial#=31065

order by

session_id

, sample_id)

where rn_min=1 or rn_max=1

/

|

| Click for enlarge |

Please, pay attention. While the inserting_time is the instant when the row is inserted in the MY_ACTIVE_SESSION_HISTORY, the sampling happens each second (in the previous picture I put both SAMPLE_TIME and INSERTING_TIME)